In-depth description of configuration options, file formats, and REST API interfaces.

This is the multi-page printable view of this section. Click here to print.

Reference

- 1: cc-backend

- 1.1: Command Line

- 1.2: Configuration

- 1.3: Environment

- 1.4: REST API

- 1.5: Authentication Handbook

- 1.6: Job Archive Handbook

- 1.7: Schemas

- 1.7.1: Application Config Schema

- 1.7.2: Cluster Schema

- 1.7.3: Job Data Schema

- 1.7.4: Job Statistics Schema

- 1.7.5: Unit Schema

- 1.7.6: Job Archive Metadata Schema

- 1.7.7: Job Archive Metrics Data Schema

- 1.8: Tools

- 1.8.1: archive-manager

- 1.8.2: archive-migration

- 1.8.3: convert-pem-pubkey

- 1.8.4: gen-keypair

- 1.8.5: grepCCLog.pl

- 1.8.6: Metric Generator Script

- 2: cc-metric-store

- 2.1: Command Line

- 2.2: Configuration

- 2.3: Metric Store REST API

- 3: cc-metric-collector

- 3.1: Configuration

- 3.2: Installation

- 3.3: Usage

- 3.4: Metric Router

- 3.5: Collectors

- 4: cc-slurm-adapter

- 4.1: Installation

- 4.2: cc-slurm-adapter Configuration

- 4.3: Daemon Setup

- 4.4: Prolog/Epilog Hooks

- 4.5: Usage

- 4.6: Troubleshooting

- 4.7: Architecture

- 4.8: API Integration

1 - cc-backend

Reference information regarding the primary ClusterCockpit component “cc-backend” (GitHub Repo).

1.1 - Command Line

This page describes the command line options for the cc-backend executable.

-add-user <username>:[admin,support,manager,api,user]:<password>

Function: Add a new user. Only one role can be assigned.

Example: -add-user abcduser:manager:somepass

-apply-tags

Function: Run taggers on all completed jobs and exit.

-config <path>

Function: Specify alternative path to config.json.

Default: ./config.json

Example: -config ./configfiles/configuration.json

-del-user <username>

Function: Remove an existing user.

Example: -del-user abcduser

-dev

Function: Enable development components: GraphQL Playground and Swagger UI.

-force-db

Function: Force database version, clear dirty flag and exit.

-gops

Function: Listen via github.com/google/gops/agent (for debugging).

-import-job <path-to-meta.json>:<path-to-data.json>, ...

Function: Import a job. Argument format: <path-to-meta.json>:<path-to-data.json>,...

Example: -import-job ./to-import/job1-meta.json:./to-import/job1-data.json,./to-import/job2-meta.json:./to-import/job2-data.json

-init

Function: Setup var directory, initialize sqlite database file, config.json and .env.

-init-db

Function: Go through job-archive and re-initialize the job, tag, and

jobtag tables (all running jobs will be lost!).

-jwt <username>

Function: Generate and print a JWT for the user specified by its username.

Example: -jwt abcduser

-logdate

Function: Set this flag to add date and time to log messages.

-loglevel <level>

Function: Sets the logging level.

Arguments: debug | info | warn | err | crit

Default: warn

Example: -loglevel debug

-migrate-db

Function: Migrate database to supported version and exit.

-revert-db

Function: Migrate database to previous version and exit.

-server

Function: Start a server, continues listening on port after initialization and argument handling.

-sync-ldap

Function: Sync the hpc_user table with ldap.

-version

Function: Show version information and exit.

1.2 - Configuration

cc-backend requires a JSON configuration file. The configuration files is

structured into components. Every component is configured either in a separate

JSON object or using a separate file. When a section is put in a separate file

the section key has to have a -file suffix.

Example:

"auth-file": "./var/auth.json"

To override the default config file path, specify the location of a JSON

configuration file with the -config <file path> command line option.

Configuration Options

Section main

Section must exist.

addr: Type string (Optional). Address where the http (or https) server will listen on (for example: ‘0.0.0.0:80’). Defaultlocalhost:8080.api-allowed-ips: Type array of strings (Optional). IPv4 addresses from which the secured administrator API endpoint functions/api/*can be reached. Default: No restriction. The previous*wildcard is still supported but obsolete.user: Type string (Optional). Drop root permissions once .env was read and the port was taken. Only applicable if using privileged port.group: Type string. Drop root permissions once .env was read and the port was taken. Only applicable if using privileged port.disable-authentication: Type bool (Optional). Disable authentication (for everything: API, Web-UI, …). Defaultfalse.embed-static-files: Type bool (Optional). If all files inweb/frontend/publicshould be served from within the binary itself (they are embedded) or not. Defaulttrue.static-files: Type string (Optional). Folder where static assets can be found, ifembed-static-filesisfalse. No default.db: Type string (Optional). The db file path. Default:./var/job.db.enable-job-taggers: Type bool (Optional). Enable automatic job taggers for application and job class detection. Requires to provide tagger rules. Default:false.validate: Type bool (Optional). Validate all input JSON documents against JSON schema. Default:false.session-max-age: Type string (Optional). Specifies for how long a session shall be valid as a string parsable by time.ParseDuration(). If 0 or empty, the session/token does not expire! Default168h.https-cert-fileandhttps-key-file: Type string (Optional). If both those options are not empty, use HTTPS using those certificates. Default: No HTTPS.redirect-http-to: Type string (Optional). If not the empty string andaddrdoes not end in “:80”, redirect every request incoming at port 80 to that url.stop-jobs-exceeding-walltime: Type int (Optional). If not zero, automatically mark jobs as stopped running X seconds longer than their walltime. Only applies if walltime is set for job. Default0.short-running-jobs-duration: Type int (Optional). Do not show running jobs shorter than X seconds. Default300.emission-constant: Type integer (Optional). Energy Mix CO2 Emission Constant [g/kWh]. If entered, UI displays estimated CO2 emission for job based on jobs’ total Energy.resampling: Type object (Optional). If configured, will enable dynamic downsampling of metric data using the configured values.minimum-points: Type integer. This option allows user to specify the minimum points required for resampling; Example: 600. If minimum-points: 600, assuming frequency of 60 seconds per sample, then a resampling would trigger only for jobs > 10 hours (600 / 60 = 10).resolutions: Type array [integer]. Array of resampling target resolutions, in seconds; Example: [600,300,60].trigger: Type integer. Trigger next zoom level at less than this many visible datapoints.

machine-state-dir: Type string (Optional). Where to store MachineState files. TODO: Explain in more detail!api-subjects: Type object (Optional). NATS subjects configuration for subscribing to job and node events. Default: No NATS API.subject-job-event: Type string. NATS subject for job events (start_job, stop_job).subject-node-state: Type string. NATS subject for node state updates.

Section nats

Section is optional.

address: Type string. Address of the NATS server (e.g.,nats://localhost:4222).username: Type string (Optional). Username for NATS authentication.password: Type string (Optional). Password for NATS authentication (optional).creds-file-path: Type string (Optional). Path to NATS credentials file for authentication (optional).

Section cron

Section must exist.

commit-job-worker: Type string. Frequency of commit job worker. Default:2mduration-worker: Type string. Frequency of duration worker. Default:5mfootprint-worker: Type string. Frequency of footprint. Default:10m

Section archive

Section is optional. If section is not provided, the default is kind set to

file with path set to ./var/job-archive.

kind: Type string (required). Set archive backend. Supported values:file,s3,sqlite.path: Type string (Optional). Path to the job-archive. Default:./var/job-archive.compression: Type integer (Optional). Setup automatic compression for jobs older than number of days.retention: Type object (Optional). Enable retention policy for archive and database.policy: Type string (required). Retention policy. Possible values none, delete, move.include-db: Type bool (Optional). Also remove jobs from database. Default:true.age: Type integer (Optional). Act on jobs with startTime older than age (in days). Default: 7 days.location: Type string (Optional). The target directory for retention. Only applicable for retention policy move. Only applies for move policy.

Section auth

Section must exist.

jwts: Type object (required). For JWT Authentication.max-age: Type string (required). Configure how long a token is valid. As string parsable by time.ParseDuration().cookie-name: Type string (Optional). Cookie that should be checked for a JWT token.vaidate-user: Type bool (Optional). Deny login for users not in database (but defined in JWT). Overwrite roles in JWT with database roles.trusted-issuer: Type string (Optional). Issuer that should be accepted when validating external JWTs.sync-user-on-login: Type bool (Optional). Add non-existent user to DB at login attempt with values provided in JWT.update-user-on-login: Type bool (Optional). Update existent user in DB at login attempt with values provided in JWT. Currently only the person name is updated.

ldap: Type object (Optional). For LDAP Authentication and user synchronisation. Defaultnil.url: Type string (required). URL of LDAP directory server.user-base: Type string (required). Base DN of user tree root.search-dn: Type string (required). DN for authenticating LDAP admin account with general read rights.user-bind: Type string (required). Expression used to authenticate users via LDAP bind. Must containuid={username}.user-filter: Type string (required). Filter to extract users for syncing.username-attr: Type string (Optional). Attribute with full user name. Defaults togecosif not provided.sync-interval: Type string (Optional). Interval used for syncing local user table with LDAP directory. Parsed using time.ParseDuration.sync-del-old-users: Type bool (Optional). Delete obsolete users in database.sync-user-on-login: Type bool (Optional). Add non-existent user to DB at login attempt if user exists in LDAP directory.

oidc: Type object (Optional). For OpenID Connect Authentication. Defaultnil.provider: Type string (required). OpenID Connect provider URL.sync-user-on-login: Type bool. Add non-existent user to DB at login attempt with values provided.update-user-on-login: Type bool. Update existent user in DB at login attempt with values provided. Currently only the person name is updated.

Section metric-store

Section must exist.

retention-in-memory: Type string (required). Keep the metrics within memory for given time interval. Retention for X hours, then the metrics would be freed. Buffers that are still used by running jobs will be kept.memory-cap: Type integer (required). If memory used exceeds value in GB, buffers still used by long running jobs will be freed.num-workers: Type integer (Optional). Number of concurrent workers for checkpoint and archive operations. Default: If not set defaults tomin(runtime.NumCPU()/2+1, 10)checkpoints: Type object (required). Configuration for checkpointing the metrics buffersfile-format: Type string (Optional). Format to use for checkpoint files. Can be JSON or Avro. Default: Avro.directory: Type string (Optional). Path in which the checkpoints should be placed. Default:./var/checkpoints.

cleanup: Type object (Optional). Configuration for the cleanup process. If not set themodeisdeletewithintervalset to theretention-in-memoryinterval.mode: Type string (Optional). The mode for cleanup. Can bedeleteorarchive. Default:delete.interval: Type string (Optional). Interval at which the cleanup runs.directory: Type string (required if mode isarchive). Directory where to put the archive files.

nats-subscriptions: Type array (Optional). List of NATS subjects the metric store should subscribe to. Items are of type object with the following attributes:subscribe-to: Type string (required). NATS subject to subscribe to.cluster-tag: Type string (Optional). Allow lines without a cluster tag, use this as default.

Section ui

The ui section specifies defaults for the web user interface. The defaults

which metrics to show in different views can be overwritten per cluster or

subcluster.

job-list: Type object (Optional). Job list defaults. Applies to user and jobs views.use-paging: Type bool (Optional). If classic paging is used instead of continuous scrolling by default.show-footprint: Type bool (Optional). If footprint bars are shown as first column by default.

node-list: Type object (Optional). Node list defaults. Applies to node list view.use-paging: Type bool (Optional). If classic paging is used instead of continuous scrolling by default.

job-view: Type object (Optional). Job view defaults.show-polar-plot: Type bool (Optional). If the job metric footprints polar plot is shown by default.show-footprint: Type bool (Optional). If the annotated job metric footprint bars are shown by default.show-roofline: Type bool (Optional). If the job roofline plot is shown by default.show-stat-table: Type bool (Optional). If the job metric statistics table is shown by default.

metric-config: Type object (Optional). Global initial metric selections for primary views of all clusters.job-list-metrics: Type array [string] (Optional). Initial metrics shown for new users in job lists (User and jobs view).job-view-plot-metrics: Type array [string] (Optional). Initial metrics shown for new users as job view metric plots.job-view-table-metrics: Type array [string] (Optional). Initial metrics shown for new users in job view statistics table.clusters: Type array of objects (Optional). Overrides for global defaults by cluster and subcluster.name: Type string (required). The name of the cluster.job-list-metrics: Type array [string] (Optional). Initial metrics shown for new users in job lists (User and jobs view) for this cluster.job-view-plot-metrics: Type array [string] (Optional). Initial metrics shown for new users as job view timeplots for this cluster.job-view-table-metrics: Type array [string] (Optional). Initial metrics shown for new users in job view statistics table for this cluster.sub-clusters: Type array of objects (Optional). The array of overrides per subcluster.name: Type string (required). The name of the subcluster.job-list-metrics: Type array [string] (Optional). Initial metrics shown for new users in job lists (User and jobs view) for subcluster.job-view-plot-metrics: Type array [string] (Optional). Initial metrics shown for new users as job view timeplots for subcluster.job-view-table-metrics: Type array [string] (Optional). Initial metrics shown for new users in job view statistics table for subcluster.

plot-configuration: Type object (Optional). Initial settings for plot render options.color-background: Type bool (Optional). If the metric plot backgrounds are initially colored by threshold limits.plots-per-row: Type integer (Optional). How many plots are initially rendered per row. Applies to job, single node, and analysis views.line-width: Type integer (Optional). Initial thickness of rendered plotlines. Applies to metric plot, job compare plot and roofline.color-scheme: Type array [string] (Optional). Initial colorScheme to be used for metric plots.

1.3 - Environment

All security-related configurations, e.g. keys and passwords, are set using

environment variables. It is supported to set these by means of a .env file in

the project root.

Environment Variables

JWT_PUBLIC_KEYandJWT_PRIVATE_KEY: Base64 encoded Ed25519 keys used for JSON Web Token (JWT) authentication. You can generate your own keypair usinggo run ./tools/gen-keypair/. The release binaries also include thegen-keypairtool for x86-64. For more information, see the JWT documentation.SESSION_KEY: Some random bytes used as secret for cookie-based sessionsLDAP_ADMIN_PASSWORD: The LDAP admin user password (optional)CROSS_LOGIN_JWT_HS512_KEY: Used for token based logins via another authentication service (optional)OID_CLIENT_ID: OpenID connect client id (optional)OID_CLIENT_SECRET: OpenID connect client secret (optional)

Template .env file

Below is an example .env file.

Copy it as .env into the project root and adapt it for your needs.

# Base64 encoded Ed25519 keys (DO NOT USE THESE TWO IN PRODUCTION!)

# You can generate your own keypair using `go run tools/gen-keypair/main.go`

JWT_PUBLIC_KEY="kzfYrYy+TzpanWZHJ5qSdMj5uKUWgq74BWhQG6copP0="

JWT_PRIVATE_KEY="dtPC/6dWJFKZK7KZ78CvWuynylOmjBFyMsUWArwmodOTN9itjL5POlqdZkcnmpJ0yPm4pRaCrvgFaFAbpyik/Q=="

# Base64 encoded Ed25519 public key for accepting externally generated JWTs

# Keys in PEM format can be converted, see `tools/convert-pem-pubkey/Readme.md`

CROSS_LOGIN_JWT_PUBLIC_KEY=""

# Some random bytes used as secret for cookie-based sessions (DO NOT USE THIS ONE IN PRODUCTION)

SESSION_KEY="67d829bf61dc5f87a73fd814e2c9f629"

# Password for the ldap server (optional)

LDAP_ADMIN_PASSWORD="mashup"

1.4 - REST API

REST API Authorization

In ClusterCockpit JWTs are signed using a public/private key pair using ED25519.

Because tokens are signed using public/private key pairs, the signature also

certifies that only the party holding the private key is the one that signed it.

JWT tokens in ClusterCockpit are not encrypted, means all information is clear

text. Expiration of the generated tokens can be configured in config.json using

the max-age option in the jwts object. Example:

"jwts": {

"max-age": "168h"

},

The party that generates and signs JWT tokens has to be in possession of the

private key and any party that accepts JWT tokens must possess the public key to

validate it. cc-backed therefore requires both keys, the private one to

sign generated tokens and the public key to validate tokens that are provided by

REST API clients.

Generate ED25519 key pairs

We provide a tool as part of cc-backend to generate a ED25519 keypair.

The tool is called gen-keypair and provided as part of the release binaries.

You can easily build it yourself in the cc-backend source tree with:

go build tools/gen-keypair

To use it just call it without any arguments:

./gen-keypair

Usage of Swagger UI documentation

Swagger UI is a REST API documentation and testing framework. To use the Swagger UI for testing you have to run an instance of cc-backend on localhost (and use the default port 8080):

./cc-backend -server

You may want to start the demo as described here .

This Swagger UI is also available as part of cc-backend if you start it with

the dev option:

./cc-backend -server -dev

You may access it at this URL.

Swagger API Reference

Non-Interactive Documentation

This reference is rendered using theswaggerui plugin based on the original definition file found in the ClusterCockpit repository, but without a serving backend.This means that all interactivity (“Try It Out”) will not return actual data. However, a Curl call and a compiled Request URL will still be displayed, if an API endpoint is executed.Administrator API

Endpoints displayed here correspond to the administrator/api/ endpoints, but user-accessible /userapi/ endpoints are functionally identical. See these lists for information about accessibility.1.5 - Authentication Handbook

Introduction

cc-backend supports the following authentication methods:

- Local login with credentials stored in SQL database

- Login with authentication to a LDAP directory

- Authentication via JSON Web Token (JWT):

- With token provided in HTML request header

- With token provided in cookie

- Login via OpenID Connect (against a KeyCloak instance)

All above methods create a session cookie that is then used for subsequent authentication of requests. Multiple authentication methods can be configured at the same time. If LDAP is enabled it takes precedence over local authentication. The OpenID Connect method against a KeyCloak instance enables many more authentication methods using the ability of KeyCloak to act as an Identity Broker.

The REST API uses stateless authentication via a JWT token, which means that every requests must be authenticated.

General configuration options

All configuration is part of the cc-backend configuration file config.json.

All security sensitive options as passwords and tokens are passed in terms of

environment variables. cc-backend supports to read an .env file upon startup

and set the environment variables contained there.

Duration of session

Per default the maximum duration of a session is 7 days. To change this the

option session-max-age has to be set to a string that can be parsed by the

Golang time.ParseDuration() function.

For most use cases the largest unit h is the only relevant option.

Example:

"session-max-age": "24h",

To enable unlimited session duration set session-max-age either to 0 or empty

string.

LDAP authentication

Configuration

To enable LDAP authentication the following set of options are required as

attributes of the ldap JSON object:

url: URL of the LDAP directory server. This must be a complete URL including the protocol and not only the host name. Example:ldaps://ldsrv.mydomain.com.user_base: Base DN of user tree root. Example:ou=people,ou=users,dc=rz,dc=mydomain,dc=com.search_dn: DN for authenticating an LDAP admin account with general read rights. This is required for the sync on login and the sync options. Example:cn=monitoring,ou=adm,ou=profile,ou=manager,dc=rz,dc=mydomain,dc=comuser_bind: Expression used to authenticate users via LDAP bind. Must containuid={username}. Example:uid={username},ou=people,ou=users,dc=rz,dc=mydomain,dc=com.user_filter: Filter to extract users for syncing. Example:(&(objectclass=posixAccount)).

Optional configuration options are:

username_attr: Attribute with full user name. Defaults togecosif not provided.sync_interval: Interval used for syncing SQL user table with LDAP directory. Parsed using time.ParseDuration. The sync interval is always relative to the timecc-backendwas started. Example:24h.sync_del_old_users: Type boolean. Delete users in SQL database if not in LDAP directory anymore. This of course only applies to users that were added from LDAP.syncUserOnLogin: Type boolean. Add non-existent user to DB at login attempt if user exists in LDAP directory. This option enables that users can login at once after they are added to the LDAP directory.

The LDAP authentication method requires the environment variable

LDAP_ADMIN_PASSWORD for the search_dn account that is used to sync users.

Usage

If LDAP is configured it is the first authentication method that is tried if a

user logs in using the login form. A sync with the LDAP directory can also be

triggered from the command line using the flag -sync-ldap.

OpenID Connect authentication

Configuration

To enable OpenID Connect authentication the following set of options are

required below a top-level oicd key:

provider: The base URL of your OpenID Connect provider. Example:https://auth.example.com/realms/mycloud.

Full example:

"oidc": {

"provider": "https://auth.server.com:8080/realms/nhr-cloud"

},

Furthermore the following environment variables have to be set (in the .env

file):

OID_CLIENT_ID: Set this to the Client ID you configured in Keycloak.OID_CLIENT_SECRET: Set this to the Client ID secret available in you Keycloak Open ID Client configuration.

Required settings in KeyCloak

The OpenID Connect implementation was only tested against the KeyCloak provider.

Steps to setup KeyCloak:

Create a new realm. This will determine the provider URL.

Create a new OpenID Connect client

Set a Client ID, the Client ID secret is automatically generated and available at the

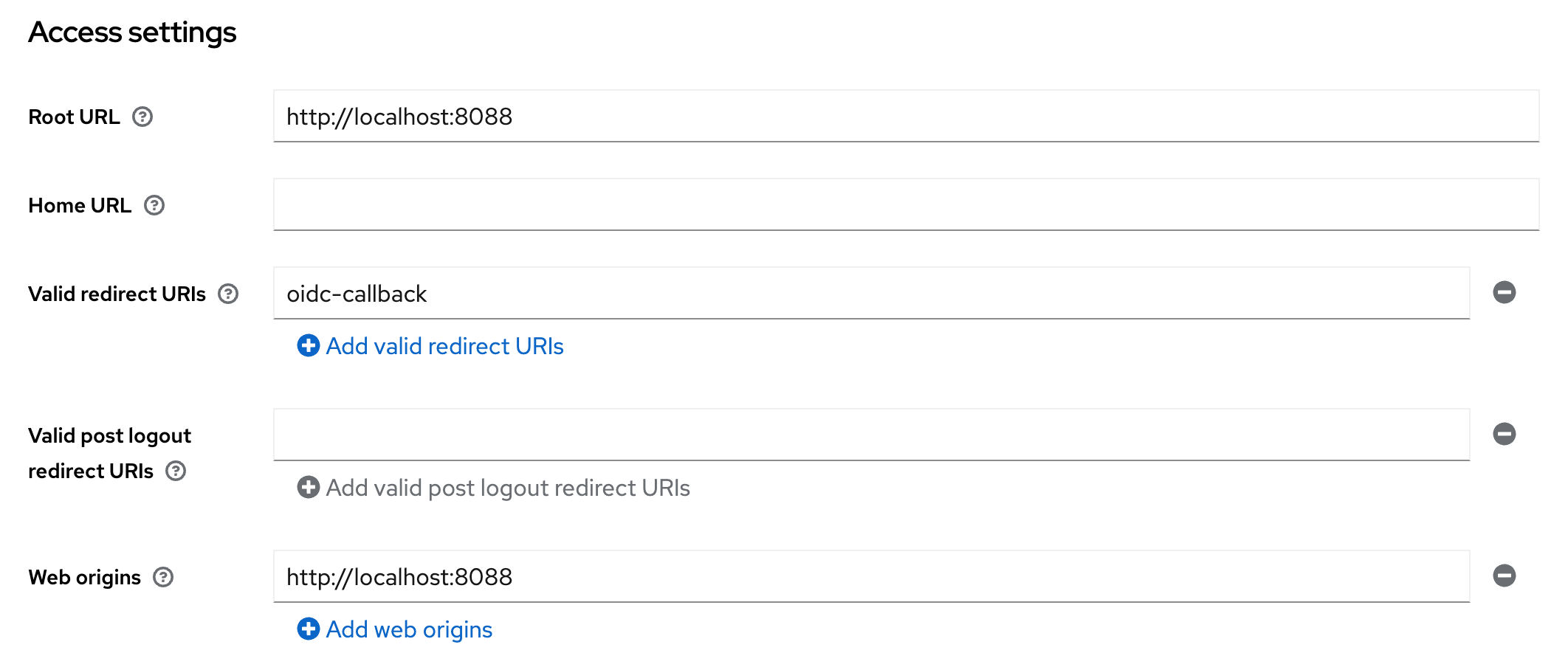

Credentialstab.For Access settings set:

Root URL: This is the base URL of your cc-backend instance.Valid redirect URLs: Set this tooidc-callback. Wildcards did not work for me.Web origins: Set this also to the base URL of your cc-backend instance.

Keycloak client Access settings

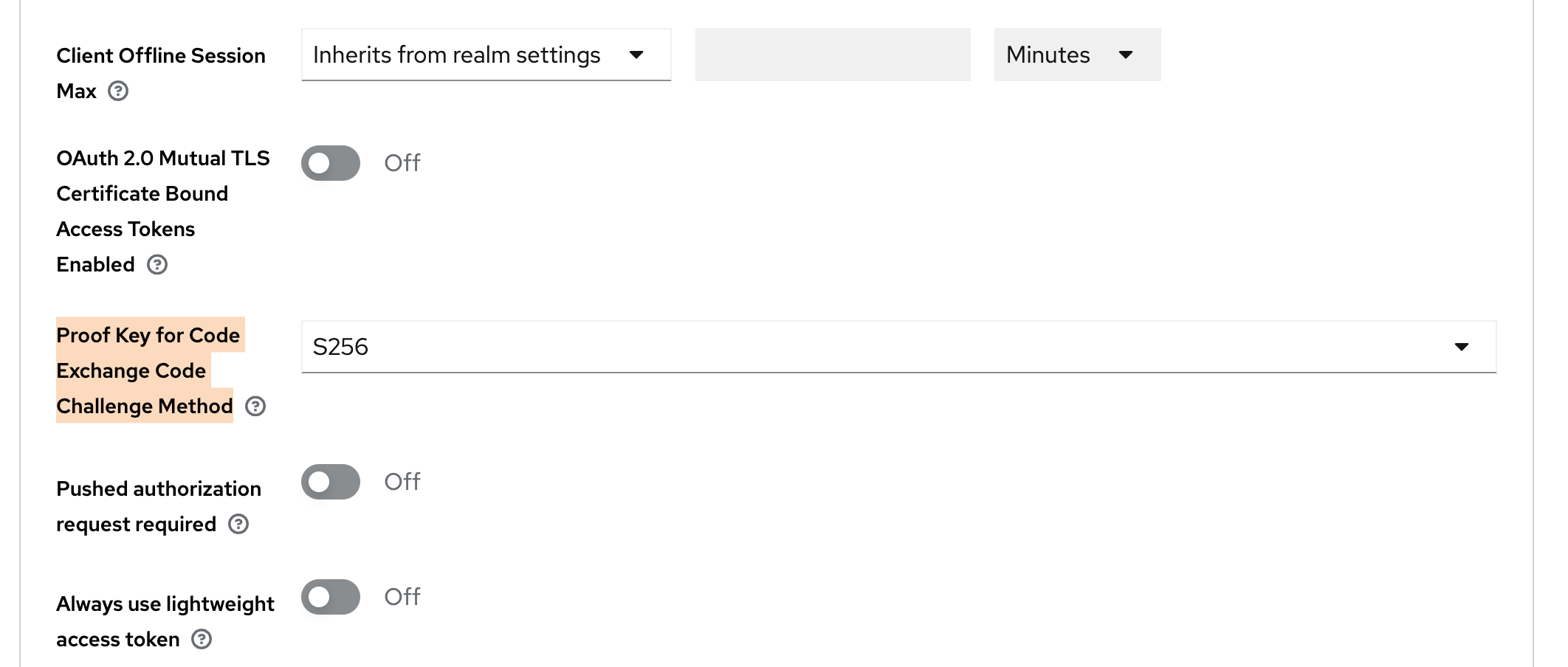

Enable PKCE:

- Click on Advanced tab. Further click on Advanced settings on the right side.

- Set the option

Proof Key for Code Exchange Code Challenge MethodtoS256.

Keycloak advanced client settings for PKCE

Everything else can be left to the default. Do not forget to create users in your realm before testing.

Usage

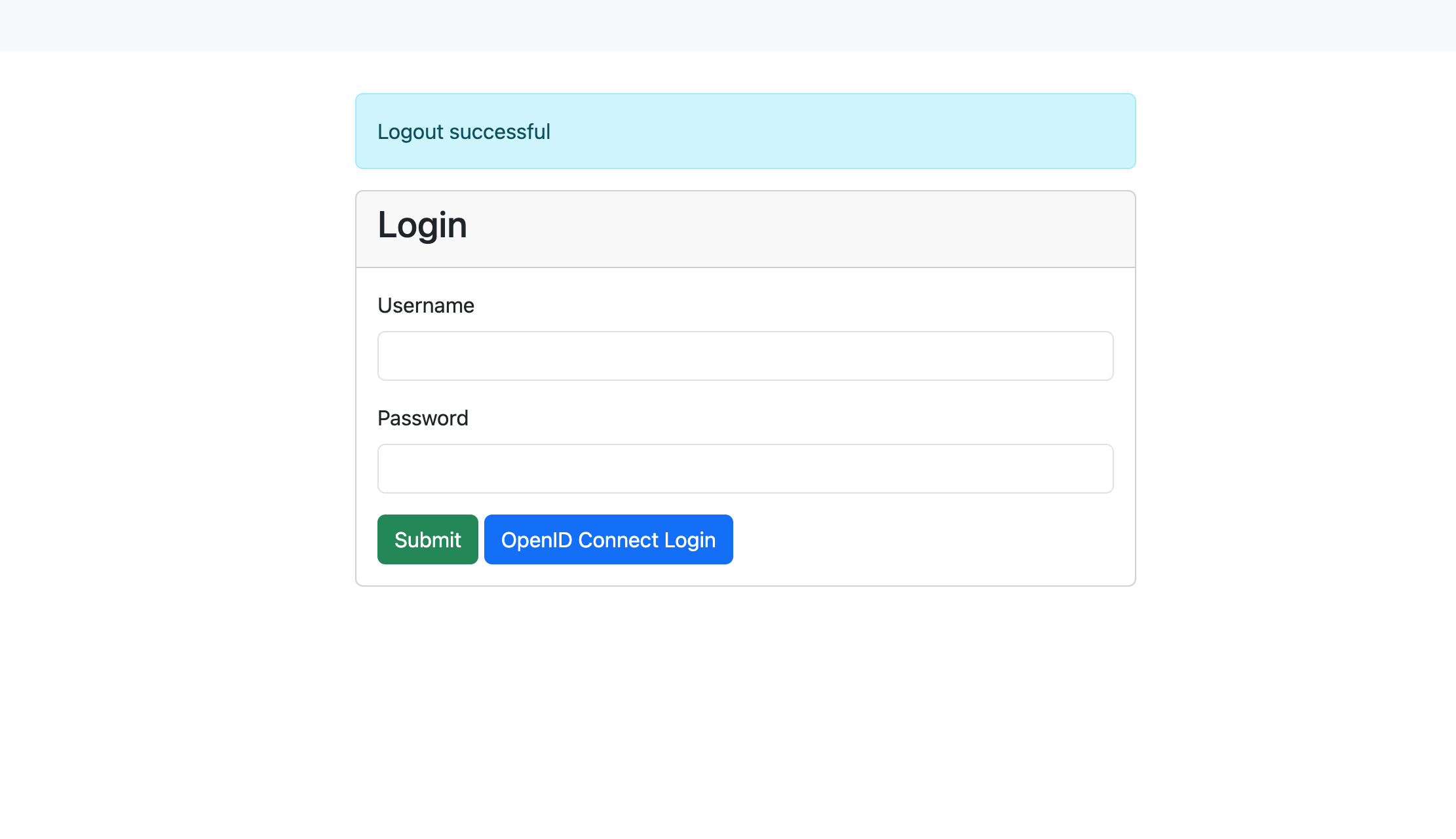

If the oicd config key is correctly set and the required environment variables

are available, an additional button for OpenID Connect Login is shown below the

login mask. If pressed this button will redirect to the OpenID Connect login.

Login mask with OpenID Connect enabled

Local authentication

No configuration is required for local authentication.

Usage

You can add an user on the command line using the flag -add-user:

./cc-backend -add-user <username>:<roles>:<password>

Example:

./cc-backend -add-user fritz:admin,api:myPass

Roles can be admin, support, manager, api, and user.

Users can be deleted using the flag -del-user:

./cc-backend -del-user fritz

Warning

The option-del-user as currently implemented will delete ALL users that

match the username independent of its origin. This means it will also delete

user records that were added from LDAP or JWT tokens.JWT token authentication

JSON web tokens are a standardized method for representing encoded claims securely between two parties. In ClusterCockpit they are used for authorization to use REST APIs as well as a method to delegate authentication to a third party. This section only describes JWT based authentication for initiating a user session.

Two variants exist:

- [1] Session Authenticator: Passes JWT token in the HTTP header Authorization using the Bearer prefix or using the query key login-token.

Example for Authorization header:

Authorization: Bearer S0VLU0UhIExFQ0tFUiEK

Example for query key used as form action in external application:

<form method="post" action="$CCROOT/jwt-login?login-token=S0VLU0UhIExFQ0tFUiEK" target="_blank">

<button type="submit">Access CC</button>

</form>

- [2] Cookie Session Authenticator: Reads the JWT token from a named cookie provided by the request, which is deleted after the session was successfully initiated. This is a more secure alternative to the standard header based solution.

JWT Configuration

- [0] Basic required configuration:

In order to enable JWT based transactions generally, the following has to be true:

- The

jwtsJSON object has to exist withinconfig.json, even if no other attribute is set within.- We recommend to set

max-ageattribute: Specifies for how long a JWT token shall be valid, defined as a string parsable bytime.ParseDuration(). - This will only affect JWTs generated by ClusterCockpit, e.g. for the use with REST-API endpoints.

- We recommend to set

In addition, the the following environment variables are used:

JWT_PRIVATE_KEY: The applications own private key to be used with JWT transactions. Required for cookie based logins and REST-API communication.JWT_PUBLIC: The applications own public key to be used with JWT transactions. Required for cookie based logins and REST-API communication.[1] Configuration for JWT Session Authenticator:

Compatible signing methods are: HS256, HS512

Only a shared (symmetric) key saved as environment variable CROSS_LOGIN_JWT_HS512_KEY is required.

- [2] Configuration for JWT Cookie Session Authenticator:

Tokens are signed with: Ed25519/EdDSA

To enable JWT authentication via cookie the following set of options are required as attributes of the jwts JSON object:

cookieName(String): Specifies which cookie should be checked for a JWT token (if no authorization header is present)trustedIssuer(String): Specifies which issuer should be accepted when validating external JWTs (iss-claim)

In addition, the Cookie Session Authenticator method requires the following environment variable:

CROSS_LOGIN_JWT_PUBLIC_KEY: Primary public key for this method, validates identity of tokens received fromtrustedIssuerand must therefore match accordingly.[3] Optional configuration attributes of the

jwtsJSON object, valid for both [1] and [2], are:validateUser(Bool): Load user by username encoded insub-claim from database, including roles, denying login if not matched in database. Ignores all other claims. By design not combinable with bothsyncUserOnLoginand/orupdateUserOnLoginoptions.syncUserOnLogin(Bool): If user encoded in token does not exist in database, add a new user entry. Does not update user on recurring JWT logins.updateUserOnLogin(Bool): If user encoded in token does exist in database, update the user entry with all encoded information. Does not add users on first-time JWT login.

JWT Usage

- [1] Usage for JWT Session Authenticator:

The endpoint for initiating JWT logins in ClusterCockpit is /jwt-login

For login with JWT Header, the header has to include the Authorization: Bearer $TOKEN information when accessing this endpoint.

For login with JWT request parameter, the external website has to submit an action with the parameter ?login-token=$TOKEN (See example above).

In both cases, the JWT should contain the following parameters:

sub: The subject, in this case this is the username. Will be used for user matching ifvalidateUseris set.exp: Expiration in Unix epoch time. Can be small as the token is only used during login.name: The full name of the person assigned to this account. Will be used to update user table.roles: String array with roles of user.projects: [Optional] String array with projects of user. Relevant if user hasmanager-role.[2] Usage for JWT Cookie Session Authenticator:

The token must be set within a cookie with a name matching the configured cookieName.

The JWT should then contain the following parameters:

sub: The subject, in this case this is the username. Will be used for user matching ifvalidateUseris set.exp: Expiration in Unix epoch time. Can be small as the token is only used during login.name: The full name of the person assigned to this account. Will be used to update user table.roles: String array with roles of user.

Authorization control

cc-backend uses roles to decide if a user is authorized to access certain

information. The roles and their rights are described in more detail here.

1.6 - Job Archive Handbook

The job archive specifies an exchange format for job meta and performance metric data. It consists of two parts:

- a Json file format

- a Directory hierarchy / Key specification

By using an open, portable and simple specification based on JSON objects it is possible to exchange job performance data for research and analysis purposes as well as use it as a robust way for archiving job performance data.

The current release supports new SQLite and S3 object store based job archive backends. Those are still experimental and for production we still recommend to use the proven file based job archive. One major disadvantage of the file based job archive backend is that for large job counts it will consume a lot of inodes.

Trying the new job-archive backends

We provide the tool archive-manager that allows to convert between different

job-archive formats. This allows to convert your existing file-based job-archive

into either a SQLite or S3 variant. Please be aware that for large archives this

may take a long time. You can find details about how to use this tool in the

archive-manager reference

documentation.

Specification for file path / key

To manage the number of directories within a single directory a tree approach is used splitting the integer job ID. The job id is split in junks of 1000 each. Usually 2 layers of directories is sufficient but the concept can be used for an arbitrary number of layers.

For a 2 layer schema this can be achieved with (code example in Perl):

$level1 = $jobID/1000;

$level2 = $jobID%1000;

$dstPath = sprintf("%s/%s/%d/%03d", $trunk, $destdir, $level1, $level2);

While for the SQLite and S3 object store based backend the systematic to introduce layers is obsolete we kept it to keep the naming consistent. This means what is the path in case of the file based backend is used as a object key and column value there.

Example

For the job ID 1034871 on cluster large with start time 1768978339 the key

is ./large/1034/871/1768978339.

Create a Job archive from scratch

In case you place the job-archive in the ./var folder create the folder with:

mkdir -p ./var/job-archive

The job-archive is versioned, the current version is documented in the Release Notes. Currently you have to create the version file manually when initializing the job-archive:

echo 3 > ./var/job-archive/version.txt

Directory layout

ClusterCockpit supports multiple clusters, for each cluster you need to create a

directory named after the cluster and a cluster.json file specifying the metric

list and hardware partitions within the clusters. Hardware partitions are

subsets of a cluster with homogeneous hardware (CPU type, memory capacity, GPUs)

that are called subclusters in ClusterCockpit.

For above configuration the job archive directory hierarchy looks like the following:

./var/job-archive/

version.txt

fritz/

cluster.json

alex/

cluster.json

woody/

cluster.json

Note

Thecluster.json files currently have to be provided and maintained by the administrator!You find help how-to create a cluster.json file in the How to create a

cluster.json file guide.

Json file format

Overview

Every cluster must be configured in a cluster.json file.

The job data consists of two files:

meta.json: Contains job meta information and job statistics.data.json: Contains complete job data with time series

The description of the json format specification is available as [[json

schema|https://json-schema.org/]] format file. The latest version of the json

schema is part of the cc-backend source tree. For external reference it is

also available in a separate repository.

Specification cluster.json

The json schema specification in its raw format is available at the cc-lib GitHub repository. A variant rendered for better readability is found in the references.

Specification meta.json

The json schema specification in its raw format is available at the cc-lib GitHub repository. A variant rendered for better readability is found in the references.

Specification data.json

The json schema specification in its raw format is available at the cc-lib GitHub repository. A variant rendered for better readability is found in the references.

Metric time series data is stored for a fixed time step. The time step is set

per metric. If no value is available for a metric time series data timestamp

null is entered.

1.7 - Schemas

ClusterCockpit Schema References for

- Application Configuration

- Cluster Configuration

- Job Data

- Job Statistics

- Units

- Job Archive Job Metadata

- Job Archive Job Metricdata

The schemas in their raw form can be found in the ClusterCockpit GitHub repository.

Manual Updates

Changes to the original JSON schemas found in the repository are not automatically rendered in this reference documentation.The raw JSON schemas are parsed and rendered for better readability using the json-schema-for-humans utility.Last Update: 04.12.20241.7.1 - Application Config Schema

A detailed description of each of the application configuration options can be found in the config documentation.

The following schema in its raw form can be found in the ClusterCockpit GitHub repository.

Manual Updates

Changes to the original JSON schema found in the repository are not automatically rendered in this reference documentation.Last Update: 04.12.2024cc-backend configuration file schema

- 1. Property

cc-backend configuration file schema > addr - 2. Property

cc-backend configuration file schema > apiAllowedIPs - 3. Property

cc-backend configuration file schema > user - 4. Property

cc-backend configuration file schema > group - 5. Property

cc-backend configuration file schema > disable-authentication - 6. Property

cc-backend configuration file schema > embed-static-files - 7. Property

cc-backend configuration file schema > static-files - 8. Property

cc-backend configuration file schema > db-driver - 9. Property

cc-backend configuration file schema > db - 10. Property

cc-backend configuration file schema > archive- 10.1. Property

cc-backend configuration file schema > archive > kind - 10.2. Property

cc-backend configuration file schema > archive > path - 10.3. Property

cc-backend configuration file schema > archive > compression - 10.4. Property

cc-backend configuration file schema > archive > retention- 10.4.1. Property

cc-backend configuration file schema > archive > retention > policy - 10.4.2. Property

cc-backend configuration file schema > archive > retention > includeDB - 10.4.3. Property

cc-backend configuration file schema > archive > retention > age - 10.4.4. Property

cc-backend configuration file schema > archive > retention > location

- 10.4.1. Property

- 10.1. Property

- 11. Property

cc-backend configuration file schema > disable-archive - 12. Property

cc-backend configuration file schema > validate - 13. Property

cc-backend configuration file schema > session-max-age - 14. Property

cc-backend configuration file schema > https-cert-file - 15. Property

cc-backend configuration file schema > https-key-file - 16. Property

cc-backend configuration file schema > redirect-http-to - 17. Property

cc-backend configuration file schema > stop-jobs-exceeding-walltime - 18. Property

cc-backend configuration file schema > short-running-jobs-duration - 19. Property

cc-backend configuration file schema > emission-constant - 20. Property

cc-backend configuration file schema > cron-frequency - 21. Property

cc-backend configuration file schema > enable-resampling - 22. Property

cc-backend configuration file schema > jwts- 22.1. Property

cc-backend configuration file schema > jwts > max-age - 22.2. Property

cc-backend configuration file schema > jwts > cookieName - 22.3. Property

cc-backend configuration file schema > jwts > validateUser - 22.4. Property

cc-backend configuration file schema > jwts > trustedIssuer - 22.5. Property

cc-backend configuration file schema > jwts > syncUserOnLogin

- 22.1. Property

- 23. Property

cc-backend configuration file schema > oidc - 24. Property

cc-backend configuration file schema > ldap- 24.1. Property

cc-backend configuration file schema > ldap > url - 24.2. Property

cc-backend configuration file schema > ldap > user_base - 24.3. Property

cc-backend configuration file schema > ldap > search_dn - 24.4. Property

cc-backend configuration file schema > ldap > user_bind - 24.5. Property

cc-backend configuration file schema > ldap > user_filter - 24.6. Property

cc-backend configuration file schema > ldap > username_attr - 24.7. Property

cc-backend configuration file schema > ldap > sync_interval - 24.8. Property

cc-backend configuration file schema > ldap > sync_del_old_users - 24.9. Property

cc-backend configuration file schema > ldap > syncUserOnLogin

- 24.1. Property

- 25. Property

cc-backend configuration file schema > clusters- 25.1. cc-backend configuration file schema > clusters > clusters items

- 25.1.1. Property

cc-backend configuration file schema > clusters > clusters items > name - 25.1.2. Property

cc-backend configuration file schema > clusters > clusters items > metricDataRepository- 25.1.2.1. Property

cc-backend configuration file schema > clusters > clusters items > metricDataRepository > kind - 25.1.2.2. Property

cc-backend configuration file schema > clusters > clusters items > metricDataRepository > url - 25.1.2.3. Property

cc-backend configuration file schema > clusters > clusters items > metricDataRepository > token

- 25.1.2.1. Property

- 25.1.3. Property

cc-backend configuration file schema > clusters > clusters items > filterRanges- 25.1.3.1. Property

cc-backend configuration file schema > clusters > clusters items > filterRanges > numNodes - 25.1.3.2. Property

cc-backend configuration file schema > clusters > clusters items > filterRanges > duration - 25.1.3.3. Property

cc-backend configuration file schema > clusters > clusters items > filterRanges > startTime

- 25.1.3.1. Property

- 25.1.1. Property

- 25.1. cc-backend configuration file schema > clusters > clusters items

- 26. Property

cc-backend configuration file schema > ui-defaults- 26.1. Property

cc-backend configuration file schema > ui-defaults > plot_general_colorBackground - 26.2. Property

cc-backend configuration file schema > ui-defaults > plot_general_lineWidth - 26.3. Property

cc-backend configuration file schema > ui-defaults > plot_list_jobsPerPage - 26.4. Property

cc-backend configuration file schema > ui-defaults > plot_view_plotsPerRow - 26.5. Property

cc-backend configuration file schema > ui-defaults > plot_view_showPolarplot - 26.6. Property

cc-backend configuration file schema > ui-defaults > plot_view_showRoofline - 26.7. Property

cc-backend configuration file schema > ui-defaults > plot_view_showStatTable - 26.8. Property

cc-backend configuration file schema > ui-defaults > system_view_selectedMetric - 26.9. Property

cc-backend configuration file schema > ui-defaults > job_view_showFootprint - 26.10. Property

cc-backend configuration file schema > ui-defaults > job_list_usePaging - 26.11. Property

cc-backend configuration file schema > ui-defaults > analysis_view_histogramMetrics - 26.12. Property

cc-backend configuration file schema > ui-defaults > analysis_view_scatterPlotMetrics - 26.13. Property

cc-backend configuration file schema > ui-defaults > job_view_nodestats_selectedMetrics - 26.14. Property

cc-backend configuration file schema > ui-defaults > job_view_selectedMetrics - 26.15. Property

cc-backend configuration file schema > ui-defaults > plot_general_colorscheme - 26.16. Property

cc-backend configuration file schema > ui-defaults > plot_list_selectedMetrics

- 26.1. Property

Title: cc-backend configuration file schema

| Type | object |

| Required | No |

| Additional properties | Any type allowed |

| Property | Pattern | Type | Deprecated | Definition | Title/Description |

|---|---|---|---|---|---|

| - addr | No | string | No | - | Address where the http (or https) server will listen on (for example: ’localhost:80’). |

| - apiAllowedIPs | No | array of string | No | - | Addresses from which secured API endpoints can be reached |

| - user | No | string | No | - | Drop root permissions once .env was read and the port was taken. Only applicable if using privileged port. |

| - group | No | string | No | - | Drop root permissions once .env was read and the port was taken. Only applicable if using privileged port. |

| - disable-authentication | No | boolean | No | - | Disable authentication (for everything: API, Web-UI, …). |

| - embed-static-files | No | boolean | No | - | If all files in `web/frontend/public` should be served from within the binary itself (they are embedded) or not. |

| - static-files | No | string | No | - | Folder where static assets can be found, if embed-static-files is false. |

| - db-driver | No | enum (of string) | No | - | sqlite3 or mysql (mysql will work for mariadb as well). |

| - db | No | string | No | - | For sqlite3 a filename, for mysql a DSN in this format: https://github.com/go-sql-driver/mysql#dsn-data-source-name (Without query parameters!). |

| - archive | No | object | No | - | Configuration keys for job-archive |

| - disable-archive | No | boolean | No | - | Keep all metric data in the metric data repositories, do not write to the job-archive. |

| - validate | No | boolean | No | - | Validate all input json documents against json schema. |

| - session-max-age | No | string | No | - | Specifies for how long a session shall be valid as a string parsable by time.ParseDuration(). If 0 or empty, the session/token does not expire! |

| - https-cert-file | No | string | No | - | Filepath to SSL certificate. If also https-key-file is set use HTTPS using those certificates. |

| - https-key-file | No | string | No | - | Filepath to SSL key file. If also https-cert-file is set use HTTPS using those certificates. |

| - redirect-http-to | No | string | No | - | If not the empty string and addr does not end in :80, redirect every request incoming at port 80 to that url. |

| - stop-jobs-exceeding-walltime | No | integer | No | - | If not zero, automatically mark jobs as stopped running X seconds longer than their walltime. Only applies if walltime is set for job. |

| - short-running-jobs-duration | No | integer | No | - | Do not show running jobs shorter than X seconds. |

| - emission-constant | No | integer | No | - | . |

| - cron-frequency | No | object | No | - | Frequency of cron job workers. |

| - enable-resampling | No | object | No | - | Enable dynamic zoom in frontend metric plots. |

| + jwts | No | object | No | - | For JWT token authentication. |

| - oidc | No | object | No | - | - |

| - ldap | No | object | No | - | For LDAP Authentication and user synchronisation. |

| + clusters | No | array of object | No | - | Configuration for the clusters to be displayed. |

| - ui-defaults | No | object | No | - | Default configuration for web UI |

1. Property cc-backend configuration file schema > addr

| Type | string |

| Required | No |

Description: Address where the http (or https) server will listen on (for example: ’localhost:80’).

2. Property cc-backend configuration file schema > apiAllowedIPs

| Type | array of string |

| Required | No |

Description: Addresses from which secured API endpoints can be reached

| Array restrictions | |

|---|---|

| Min items | N/A |

| Max items | N/A |

| Items unicity | False |

| Additional items | False |

| Tuple validation | See below |

| Each item of this array must be | Description |

|---|---|

| apiAllowedIPs items | - |

2.1. cc-backend configuration file schema > apiAllowedIPs > apiAllowedIPs items

| Type | string |

| Required | No |

3. Property cc-backend configuration file schema > user

| Type | string |

| Required | No |

Description: Drop root permissions once .env was read and the port was taken. Only applicable if using privileged port.

4. Property cc-backend configuration file schema > group

| Type | string |

| Required | No |

Description: Drop root permissions once .env was read and the port was taken. Only applicable if using privileged port.

5. Property cc-backend configuration file schema > disable-authentication

| Type | boolean |

| Required | No |

Description: Disable authentication (for everything: API, Web-UI, …).

6. Property cc-backend configuration file schema > embed-static-files

| Type | boolean |

| Required | No |

Description: If all files in web/frontend/public should be served from within the binary itself (they are embedded) or not.

7. Property cc-backend configuration file schema > static-files

| Type | string |

| Required | No |

Description: Folder where static assets can be found, if embed-static-files is false.

8. Property cc-backend configuration file schema > db-driver

| Type | enum (of string) |

| Required | No |

Description: sqlite3 or mysql (mysql will work for mariadb as well).

Must be one of:

- “sqlite3”

- “mysql”

9. Property cc-backend configuration file schema > db

| Type | string |

| Required | No |

Description: For sqlite3 a filename, for mysql a DSN in this format: https://github.com/go-sql-driver/mysql#dsn-data-source-name (Without query parameters!).

10. Property cc-backend configuration file schema > archive

| Type | object |

| Required | No |

| Additional properties | Any type allowed |

Description: Configuration keys for job-archive

| Property | Pattern | Type | Deprecated | Definition | Title/Description |

|---|---|---|---|---|---|

| + kind | No | enum (of string) | No | - | Backend type for job-archive |

| - path | No | string | No | - | Path to job archive for file backend |

| - compression | No | integer | No | - | Setup automatic compression for jobs older than number of days |

| - retention | No | object | No | - | Configuration keys for retention |

10.1. Property cc-backend configuration file schema > archive > kind

| Type | enum (of string) |

| Required | Yes |

Description: Backend type for job-archive

Must be one of:

- “file”

- “s3”

10.2. Property cc-backend configuration file schema > archive > path

| Type | string |

| Required | No |

Description: Path to job archive for file backend

10.3. Property cc-backend configuration file schema > archive > compression

| Type | integer |

| Required | No |

Description: Setup automatic compression for jobs older than number of days

10.4. Property cc-backend configuration file schema > archive > retention

| Type | object |

| Required | No |

| Additional properties | Any type allowed |

Description: Configuration keys for retention

| Property | Pattern | Type | Deprecated | Definition | Title/Description |

|---|---|---|---|---|---|

| + policy | No | enum (of string) | No | - | Retention policy |

| - includeDB | No | boolean | No | - | Also remove jobs from database |

| - age | No | integer | No | - | Act on jobs with startTime older than age (in days) |

| - location | No | string | No | - | The target directory for retention. Only applicable for retention move. |

10.4.1. Property cc-backend configuration file schema > archive > retention > policy

| Type | enum (of string) |

| Required | Yes |

Description: Retention policy

Must be one of:

- “none”

- “delete”

- “move”

10.4.2. Property cc-backend configuration file schema > archive > retention > includeDB

| Type | boolean |

| Required | No |

Description: Also remove jobs from database

10.4.3. Property cc-backend configuration file schema > archive > retention > age

| Type | integer |

| Required | No |

Description: Act on jobs with startTime older than age (in days)

10.4.4. Property cc-backend configuration file schema > archive > retention > location

| Type | string |

| Required | No |

Description: The target directory for retention. Only applicable for retention move.

11. Property cc-backend configuration file schema > disable-archive

| Type | boolean |

| Required | No |

Description: Keep all metric data in the metric data repositories, do not write to the job-archive.

12. Property cc-backend configuration file schema > validate

| Type | boolean |

| Required | No |

Description: Validate all input json documents against json schema.

13. Property cc-backend configuration file schema > session-max-age

| Type | string |

| Required | No |

Description: Specifies for how long a session shall be valid as a string parsable by time.ParseDuration(). If 0 or empty, the session/token does not expire!

14. Property cc-backend configuration file schema > https-cert-file

| Type | string |

| Required | No |

Description: Filepath to SSL certificate. If also https-key-file is set use HTTPS using those certificates.

15. Property cc-backend configuration file schema > https-key-file

| Type | string |

| Required | No |

Description: Filepath to SSL key file. If also https-cert-file is set use HTTPS using those certificates.

16. Property cc-backend configuration file schema > redirect-http-to

| Type | string |

| Required | No |

Description: If not the empty string and addr does not end in :80, redirect every request incoming at port 80 to that url.

17. Property cc-backend configuration file schema > stop-jobs-exceeding-walltime

| Type | integer |

| Required | No |

Description: If not zero, automatically mark jobs as stopped running X seconds longer than their walltime. Only applies if walltime is set for job.

18. Property cc-backend configuration file schema > short-running-jobs-duration

| Type | integer |

| Required | No |

Description: Do not show running jobs shorter than X seconds.

19. Property cc-backend configuration file schema > emission-constant

| Type | integer |

| Required | No |

Description: .

20. Property cc-backend configuration file schema > cron-frequency

| Type | object |

| Required | No |

| Additional properties | Any type allowed |

Description: Frequency of cron job workers.

| Property | Pattern | Type | Deprecated | Definition | Title/Description |

|---|---|---|---|---|---|

| - duration-worker | No | string | No | - | Duration Update Worker [Defaults to ‘5m’] |

| - footprint-worker | No | string | No | - | Metric-Footprint Update Worker [Defaults to ‘10m’] |

20.1. Property cc-backend configuration file schema > cron-frequency > duration-worker

| Type | string |

| Required | No |

Description: Duration Update Worker [Defaults to ‘5m’]

20.2. Property cc-backend configuration file schema > cron-frequency > footprint-worker

| Type | string |

| Required | No |

Description: Metric-Footprint Update Worker [Defaults to ‘10m’]

21. Property cc-backend configuration file schema > enable-resampling

| Type | object |

| Required | No |

| Additional properties | Any type allowed |

Description: Enable dynamic zoom in frontend metric plots.

| Property | Pattern | Type | Deprecated | Definition | Title/Description |

|---|---|---|---|---|---|

| + trigger | No | integer | No | - | Trigger next zoom level at less than this many visible datapoints. |

| + resolutions | No | array of integer | No | - | Array of resampling target resolutions, in seconds. |

21.1. Property cc-backend configuration file schema > enable-resampling > trigger

| Type | integer |

| Required | Yes |

Description: Trigger next zoom level at less than this many visible datapoints.

21.2. Property cc-backend configuration file schema > enable-resampling > resolutions

| Type | array of integer |

| Required | Yes |

Description: Array of resampling target resolutions, in seconds.

| Array restrictions | |

|---|---|

| Min items | N/A |

| Max items | N/A |

| Items unicity | False |

| Additional items | False |

| Tuple validation | See below |

| Each item of this array must be | Description |

|---|---|

| resolutions items | - |

21.2.1. cc-backend configuration file schema > enable-resampling > resolutions > resolutions items

| Type | integer |

| Required | No |

22. Property cc-backend configuration file schema > jwts

| Type | object |

| Required | Yes |

| Additional properties | Any type allowed |

Description: For JWT token authentication.

| Property | Pattern | Type | Deprecated | Definition | Title/Description |

|---|---|---|---|---|---|

| + max-age | No | string | No | - | Configure how long a token is valid. As string parsable by time.ParseDuration() |

| - cookieName | No | string | No | - | Cookie that should be checked for a JWT token. |

| - validateUser | No | boolean | No | - | Deny login for users not in database (but defined in JWT). Overwrite roles in JWT with database roles. |

| - trustedIssuer | No | string | No | - | Issuer that should be accepted when validating external JWTs |

| - syncUserOnLogin | No | boolean | No | - | Add non-existent user to DB at login attempt with values provided in JWT. |

22.1. Property cc-backend configuration file schema > jwts > max-age

| Type | string |

| Required | Yes |

Description: Configure how long a token is valid. As string parsable by time.ParseDuration()

22.2. Property cc-backend configuration file schema > jwts > cookieName

| Type | string |

| Required | No |

Description: Cookie that should be checked for a JWT token.

22.3. Property cc-backend configuration file schema > jwts > validateUser

| Type | boolean |

| Required | No |

Description: Deny login for users not in database (but defined in JWT). Overwrite roles in JWT with database roles.

22.4. Property cc-backend configuration file schema > jwts > trustedIssuer

| Type | string |

| Required | No |

Description: Issuer that should be accepted when validating external JWTs

22.5. Property cc-backend configuration file schema > jwts > syncUserOnLogin

| Type | boolean |

| Required | No |

Description: Add non-existent user to DB at login attempt with values provided in JWT.

23. Property cc-backend configuration file schema > oidc

| Type | object |

| Required | No |

| Additional properties | Any type allowed |

23.1. The following properties are required

- provider

24. Property cc-backend configuration file schema > ldap

| Type | object |

| Required | No |

| Additional properties | Any type allowed |

Description: For LDAP Authentication and user synchronisation.

| Property | Pattern | Type | Deprecated | Definition | Title/Description |

|---|---|---|---|---|---|

| + url | No | string | No | - | URL of LDAP directory server. |

| + user_base | No | string | No | - | Base DN of user tree root. |

| + search_dn | No | string | No | - | DN for authenticating LDAP admin account with general read rights. |

| + user_bind | No | string | No | - | Expression used to authenticate users via LDAP bind. Must contain uid={username}. |

| + user_filter | No | string | No | - | Filter to extract users for syncing. |

| - username_attr | No | string | No | - | Attribute with full username. Default: gecos |

| - sync_interval | No | string | No | - | Interval used for syncing local user table with LDAP directory. Parsed using time.ParseDuration. |

| - sync_del_old_users | No | boolean | No | - | Delete obsolete users in database. |

| - syncUserOnLogin | No | boolean | No | - | Add non-existent user to DB at login attempt if user exists in Ldap directory |

24.1. Property cc-backend configuration file schema > ldap > url

| Type | string |

| Required | Yes |

Description: URL of LDAP directory server.

24.2. Property cc-backend configuration file schema > ldap > user_base

| Type | string |

| Required | Yes |

Description: Base DN of user tree root.

24.3. Property cc-backend configuration file schema > ldap > search_dn

| Type | string |

| Required | Yes |

Description: DN for authenticating LDAP admin account with general read rights.

24.4. Property cc-backend configuration file schema > ldap > user_bind

| Type | string |

| Required | Yes |

Description: Expression used to authenticate users via LDAP bind. Must contain uid={username}.

24.5. Property cc-backend configuration file schema > ldap > user_filter

| Type | string |

| Required | Yes |

Description: Filter to extract users for syncing.

24.6. Property cc-backend configuration file schema > ldap > username_attr

| Type | string |

| Required | No |

Description: Attribute with full username. Default: gecos

24.7. Property cc-backend configuration file schema > ldap > sync_interval

| Type | string |

| Required | No |

Description: Interval used for syncing local user table with LDAP directory. Parsed using time.ParseDuration.

24.8. Property cc-backend configuration file schema > ldap > sync_del_old_users

| Type | boolean |

| Required | No |

Description: Delete obsolete users in database.

24.9. Property cc-backend configuration file schema > ldap > syncUserOnLogin

| Type | boolean |

| Required | No |

Description: Add non-existent user to DB at login attempt if user exists in Ldap directory

25. Property cc-backend configuration file schema > clusters

| Type | array of object |

| Required | Yes |

Description: Configuration for the clusters to be displayed.

| Array restrictions | |

|---|---|

| Min items | N/A |

| Max items | N/A |

| Items unicity | False |

| Additional items | False |

| Tuple validation | See below |

| Each item of this array must be | Description |

|---|---|

| clusters items | - |

25.1. cc-backend configuration file schema > clusters > clusters items

| Type | object |

| Required | No |

| Additional properties | Any type allowed |

| Property | Pattern | Type | Deprecated | Definition | Title/Description |

|---|---|---|---|---|---|

| + name | No | string | No | - | The name of the cluster. |

| + metricDataRepository | No | object | No | - | Type of the metric data repository for this cluster |

| + filterRanges | No | object | No | - | This option controls the slider ranges for the UI controls of numNodes, duration, and startTime. |

25.1.1. Property cc-backend configuration file schema > clusters > clusters items > name

| Type | string |

| Required | Yes |

Description: The name of the cluster.

25.1.2. Property cc-backend configuration file schema > clusters > clusters items > metricDataRepository

| Type | object |

| Required | Yes |

| Additional properties | Any type allowed |

Description: Type of the metric data repository for this cluster

| Property | Pattern | Type | Deprecated | Definition | Title/Description |

|---|---|---|---|---|---|

| + kind | No | enum (of string) | No | - | - |

| + url | No | string | No | - | - |

| - token | No | string | No | - | - |

25.1.2.1. Property cc-backend configuration file schema > clusters > clusters items > metricDataRepository > kind

| Type | enum (of string) |

| Required | Yes |

Must be one of:

- “influxdb”

- “prometheus”

- “cc-metric-store”

- “test”

25.1.2.2. Property cc-backend configuration file schema > clusters > clusters items > metricDataRepository > url

| Type | string |

| Required | Yes |

25.1.2.3. Property cc-backend configuration file schema > clusters > clusters items > metricDataRepository > token

| Type | string |

| Required | No |

25.1.3. Property cc-backend configuration file schema > clusters > clusters items > filterRanges

| Type | object |

| Required | Yes |

| Additional properties | Any type allowed |

Description: This option controls the slider ranges for the UI controls of numNodes, duration, and startTime.

| Property | Pattern | Type | Deprecated | Definition | Title/Description |

|---|---|---|---|---|---|

| + numNodes | No | object | No | - | UI slider range for number of nodes |

| + duration | No | object | No | - | UI slider range for duration |

| + startTime | No | object | No | - | UI slider range for start time |

25.1.3.1. Property cc-backend configuration file schema > clusters > clusters items > filterRanges > numNodes

| Type | object |

| Required | Yes |

| Additional properties | Any type allowed |

Description: UI slider range for number of nodes

| Property | Pattern | Type | Deprecated | Definition | Title/Description |

|---|---|---|---|---|---|

| + from | No | integer | No | - | - |

| + to | No | integer | No | - | - |

25.1.3.1.1. Property cc-backend configuration file schema > clusters > clusters items > filterRanges > numNodes > from

| Type | integer |

| Required | Yes |

25.1.3.1.2. Property cc-backend configuration file schema > clusters > clusters items > filterRanges > numNodes > to

| Type | integer |

| Required | Yes |

25.1.3.2. Property cc-backend configuration file schema > clusters > clusters items > filterRanges > duration

| Type | object |

| Required | Yes |

| Additional properties | Any type allowed |

Description: UI slider range for duration

| Property | Pattern | Type | Deprecated | Definition | Title/Description |

|---|---|---|---|---|---|

| + from | No | integer | No | - | - |

| + to | No | integer | No | - | - |

25.1.3.2.1. Property cc-backend configuration file schema > clusters > clusters items > filterRanges > duration > from

| Type | integer |

| Required | Yes |

25.1.3.2.2. Property cc-backend configuration file schema > clusters > clusters items > filterRanges > duration > to

| Type | integer |

| Required | Yes |

25.1.3.3. Property cc-backend configuration file schema > clusters > clusters items > filterRanges > startTime

| Type | object |

| Required | Yes |

| Additional properties | Any type allowed |

Description: UI slider range for start time

| Property | Pattern | Type | Deprecated | Definition | Title/Description |

|---|---|---|---|---|---|

| + from | No | string | No | - | - |

| + to | No | null | No | - | - |

25.1.3.3.1. Property cc-backend configuration file schema > clusters > clusters items > filterRanges > startTime > from

| Type | string |

| Required | Yes |

| Format | date-time |

25.1.3.3.2. Property cc-backend configuration file schema > clusters > clusters items > filterRanges > startTime > to

| Type | null |

| Required | Yes |

26. Property cc-backend configuration file schema > ui-defaults

| Type | object |

| Required | No |

| Additional properties | Any type allowed |

Description: Default configuration for web UI

| Property | Pattern | Type | Deprecated | Definition | Title/Description |

|---|---|---|---|---|---|

| + plot_general_colorBackground | No | boolean | No | - | Color plot background according to job average threshold limits |

| + plot_general_lineWidth | No | integer | No | - | Initial linewidth |

| + plot_list_jobsPerPage | No | integer | No | - | Jobs shown per page in job lists |

| + plot_view_plotsPerRow | No | integer | No | - | Number of plots per row in single job view |

| + plot_view_showPolarplot | No | boolean | No | - | Option to toggle polar plot in single job view |

| + plot_view_showRoofline | No | boolean | No | - | Option to toggle roofline plot in single job view |

| + plot_view_showStatTable | No | boolean | No | - | Option to toggle the node statistic table in single job view |

| + system_view_selectedMetric | No | string | No | - | Initial metric shown in system view |

| + job_view_showFootprint | No | boolean | No | - | Option to toggle footprint ui in single job view |

| + job_list_usePaging | No | boolean | No | - | Option to switch from continous scroll to paging |

| + analysis_view_histogramMetrics | No | array of string | No | - | Metrics to show as job count histograms in analysis view |

| + analysis_view_scatterPlotMetrics | No | array of array | No | - | Initial scatter plto configuration in analysis view |

| + job_view_nodestats_selectedMetrics | No | array of string | No | - | Initial metrics shown in node statistics table of single job view |

| + job_view_selectedMetrics | No | array of string | No | - | - |

| + plot_general_colorscheme | No | array of string | No | - | Initial color scheme |

| + plot_list_selectedMetrics | No | array of string | No | - | Initial metric plots shown in jobs lists |

26.1. Property cc-backend configuration file schema > ui-defaults > plot_general_colorBackground

| Type | boolean |

| Required | Yes |

Description: Color plot background according to job average threshold limits

26.2. Property cc-backend configuration file schema > ui-defaults > plot_general_lineWidth

| Type | integer |

| Required | Yes |

Description: Initial linewidth

26.3. Property cc-backend configuration file schema > ui-defaults > plot_list_jobsPerPage

| Type | integer |

| Required | Yes |

Description: Jobs shown per page in job lists

26.4. Property cc-backend configuration file schema > ui-defaults > plot_view_plotsPerRow

| Type | integer |

| Required | Yes |

Description: Number of plots per row in single job view

26.5. Property cc-backend configuration file schema > ui-defaults > plot_view_showPolarplot

| Type | boolean |

| Required | Yes |

Description: Option to toggle polar plot in single job view

26.6. Property cc-backend configuration file schema > ui-defaults > plot_view_showRoofline

| Type | boolean |

| Required | Yes |

Description: Option to toggle roofline plot in single job view

26.7. Property cc-backend configuration file schema > ui-defaults > plot_view_showStatTable

| Type | boolean |

| Required | Yes |

Description: Option to toggle the node statistic table in single job view

26.8. Property cc-backend configuration file schema > ui-defaults > system_view_selectedMetric

| Type | string |

| Required | Yes |

Description: Initial metric shown in system view

26.9. Property cc-backend configuration file schema > ui-defaults > job_view_showFootprint

| Type | boolean |

| Required | Yes |

Description: Option to toggle footprint ui in single job view

26.10. Property cc-backend configuration file schema > ui-defaults > job_list_usePaging

| Type | boolean |

| Required | Yes |

Description: Option to switch from continous scroll to paging

26.11. Property cc-backend configuration file schema > ui-defaults > analysis_view_histogramMetrics

| Type | array of string |

| Required | Yes |

Description: Metrics to show as job count histograms in analysis view

| Array restrictions | |

|---|---|

| Min items | N/A |

| Max items | N/A |

| Items unicity | False |

| Additional items | False |

| Tuple validation | See below |

| Each item of this array must be | Description |

|---|---|

| analysis_view_histogramMetrics items | - |

26.11.1. cc-backend configuration file schema > ui-defaults > analysis_view_histogramMetrics > analysis_view_histogramMetrics items

| Type | string |

| Required | No |

26.12. Property cc-backend configuration file schema > ui-defaults > analysis_view_scatterPlotMetrics

| Type | array of array |

| Required | Yes |

Description: Initial scatter plto configuration in analysis view

| Array restrictions | |

|---|---|

| Min items | N/A |

| Max items | N/A |

| Items unicity | False |

| Additional items | False |

| Tuple validation | See below |

| Each item of this array must be | Description |

|---|---|

| analysis_view_scatterPlotMetrics items | - |

26.12.1. cc-backend configuration file schema > ui-defaults > analysis_view_scatterPlotMetrics > analysis_view_scatterPlotMetrics items

| Type | array of string |

| Required | No |

| Array restrictions | |

|---|---|

| Min items | 1 |

| Max items | N/A |

| Items unicity | False |

| Additional items | False |

| Tuple validation | See below |

| Each item of this array must be | Description |

|---|---|

| analysis_view_scatterPlotMetrics items items | - |

26.12.1.1. cc-backend configuration file schema > ui-defaults > analysis_view_scatterPlotMetrics > analysis_view_scatterPlotMetrics items > analysis_view_scatterPlotMetrics items items

| Type | string |

| Required | No |

26.13. Property cc-backend configuration file schema > ui-defaults > job_view_nodestats_selectedMetrics

| Type | array of string |

| Required | Yes |

Description: Initial metrics shown in node statistics table of single job view

| Array restrictions | |

|---|---|

| Min items | N/A |

| Max items | N/A |

| Items unicity | False |

| Additional items | False |

| Tuple validation | See below |

| Each item of this array must be | Description |

|---|---|

| job_view_nodestats_selectedMetrics items | - |

26.13.1. cc-backend configuration file schema > ui-defaults > job_view_nodestats_selectedMetrics > job_view_nodestats_selectedMetrics items

| Type | string |

| Required | No |

26.14. Property cc-backend configuration file schema > ui-defaults > job_view_selectedMetrics

| Type | array of string |

| Required | Yes |

| Array restrictions | |

|---|---|

| Min items | N/A |

| Max items | N/A |

| Items unicity | False |

| Additional items | False |

| Tuple validation | See below |

| Each item of this array must be | Description |

|---|---|

| job_view_selectedMetrics items | - |

26.14.1. cc-backend configuration file schema > ui-defaults > job_view_selectedMetrics > job_view_selectedMetrics items

| Type | string |

| Required | No |

26.15. Property cc-backend configuration file schema > ui-defaults > plot_general_colorscheme

| Type | array of string |

| Required | Yes |

Description: Initial color scheme

| Array restrictions | |

|---|---|

| Min items | N/A |

| Max items | N/A |

| Items unicity | False |

| Additional items | False |

| Tuple validation | See below |

| Each item of this array must be | Description |

|---|---|

| plot_general_colorscheme items | - |

26.15.1. cc-backend configuration file schema > ui-defaults > plot_general_colorscheme > plot_general_colorscheme items

| Type | string |

| Required | No |

26.16. Property cc-backend configuration file schema > ui-defaults > plot_list_selectedMetrics

| Type | array of string |

| Required | Yes |

Description: Initial metric plots shown in jobs lists

| Array restrictions | |

|---|---|

| Min items | N/A |

| Max items | N/A |

| Items unicity | False |

| Additional items | False |

| Tuple validation | See below |

| Each item of this array must be | Description |

|---|---|

| plot_list_selectedMetrics items | - |

26.16.1. cc-backend configuration file schema > ui-defaults > plot_list_selectedMetrics > plot_list_selectedMetrics items

| Type | string |

| Required | No |

Generated using json-schema-for-humans on 2024-12-04 at 16:45:59 +0100

1.7.2 - Cluster Schema

The following schema in its raw form can be found in the ClusterCockpit GitHub repository.

Manual Updates

Changes to the original JSON schema found in the repository are not automatically rendered in this reference documentation.Last Update: 04.12.2024HPC cluster description

- 1. Property

HPC cluster description > name - 2. Property

HPC cluster description > metricConfig- 2.1. HPC cluster description > metricConfig > metricConfig items

- 2.1.1. Property

HPC cluster description > metricConfig > metricConfig items > name - 2.1.2. Property

HPC cluster description > metricConfig > metricConfig items > unit - 2.1.3. Property

HPC cluster description > metricConfig > metricConfig items > scope - 2.1.4. Property

HPC cluster description > metricConfig > metricConfig items > timestep - 2.1.5. Property

HPC cluster description > metricConfig > metricConfig items > aggregation - 2.1.6. Property

HPC cluster description > metricConfig > metricConfig items > footprint - 2.1.7. Property

HPC cluster description > metricConfig > metricConfig items > energy - 2.1.8. Property

HPC cluster description > metricConfig > metricConfig items > lowerIsBetter - 2.1.9. Property

HPC cluster description > metricConfig > metricConfig items > peak - 2.1.10. Property

HPC cluster description > metricConfig > metricConfig items > normal - 2.1.11. Property

HPC cluster description > metricConfig > metricConfig items > caution - 2.1.12. Property

HPC cluster description > metricConfig > metricConfig items > alert - 2.1.13. Property